Context Engineering: Prompt engineer 2.0

Context Engineering: Why It’s the New Prompt Engineering and why it is important.

Introduction

Bonjourno, fellow coders—I’m Malik, the experimenter at Code Meet AI, where we hack AI to boost our dev game.

The term Vibe coding was introduced by AI Research Andrej Karpathy last February to talk about the idea of using AI tools to generate code. The vibe coding then was famous among software engineers, but it just didn’t have a name yet.

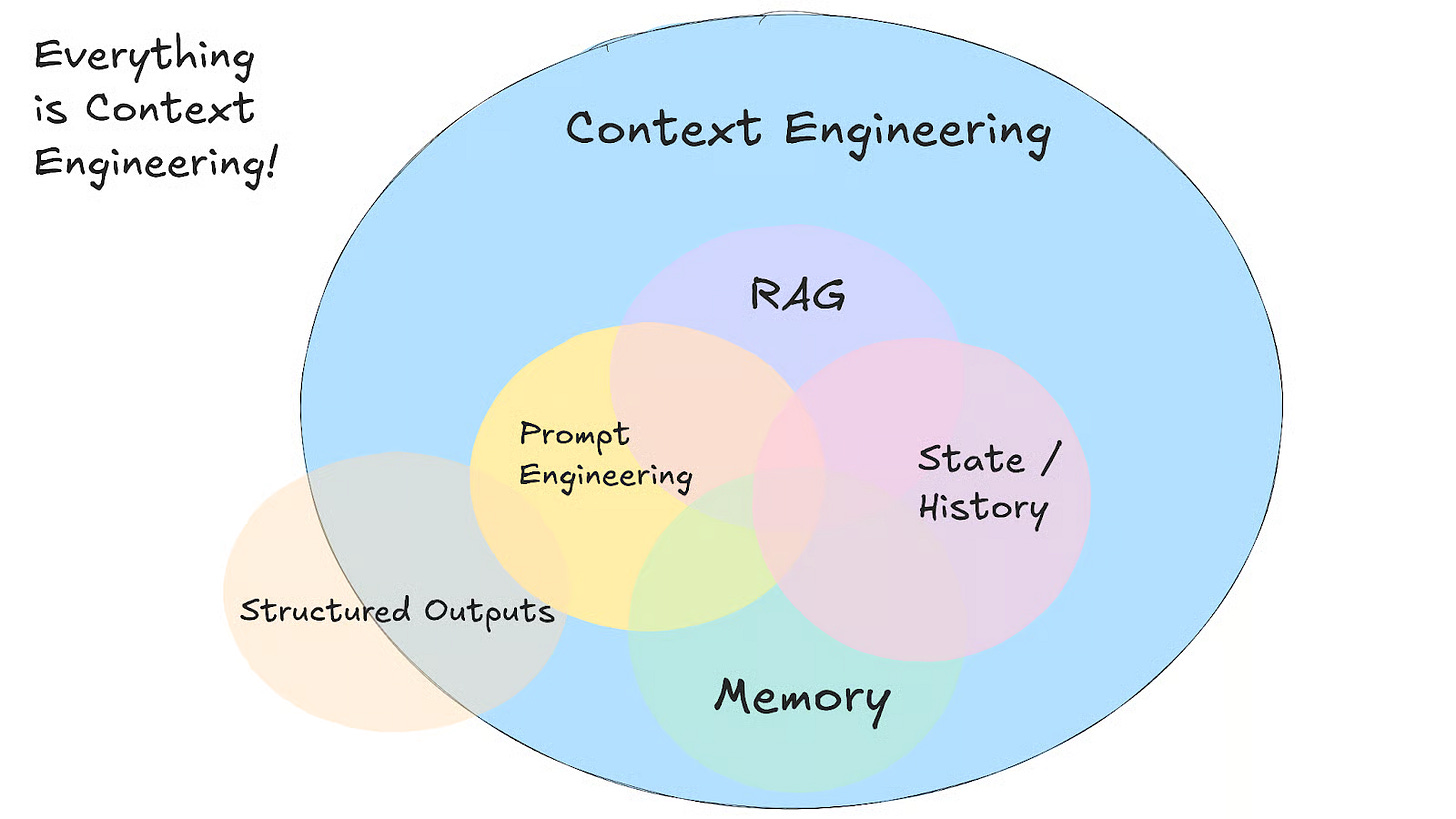

Same with Context Engineering, the idea is that when you usually prompt an LLM, either for a technical thing or not, you always try to add as much information as possible; this is called context.

Adding a Memory bank, as I explained to you before, is one of the best ways to Context engineer your LLM coding tool. It will know what you are working on, keep track of indexing the files and what is going on, and for each iteration, it will check the progress and log the changes.

In this tutorial, I will go deeper into what Context Engineering is, why it is important, and how you can achieve that with a real example in the next article.

What Is Context Engineering?

Context Engineering is the craft of shaping the information environment an AI system uses to reason, decide, and act.

It’s not about prompting better: it’s about architecting understanding.

In practice, it means managing:

Memory and context windows: what the model sees and remembers.

System instructions: its role, goals, and tone.

Retrieval systems: what data is dynamically fed in.

Session state: continuity across interactions.

Data hierarchy: deciding what’s persistent, relevant, or ephemeral.

Why Context Engineering Matters

Reduces AI Failures: Most agent failures aren’t model failures.

Ensures Consistency: AI follows your project patterns and conventions

Enables Complex Features: AI can handle multi-step implementations with proper context

Self-Correcting: Validation loops allow AI to fix its own mistakes

Context Engineering vs Prompt engineering:

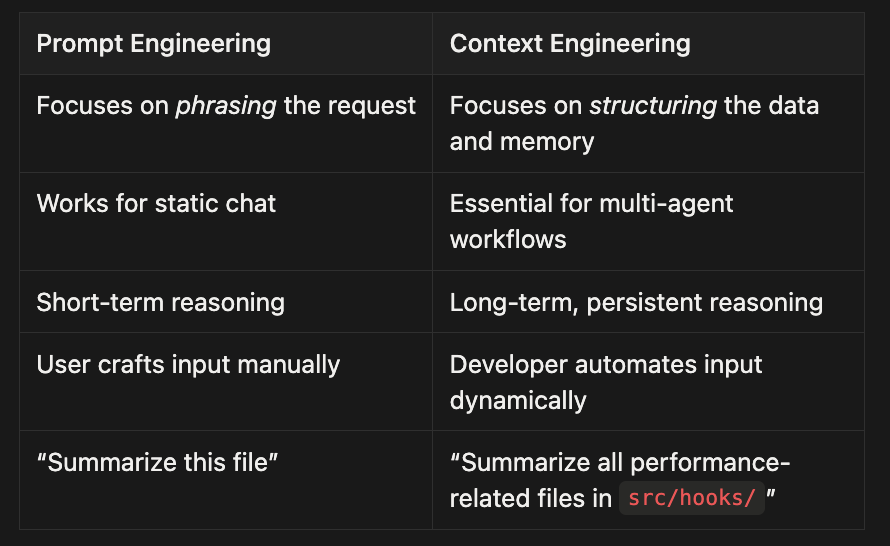

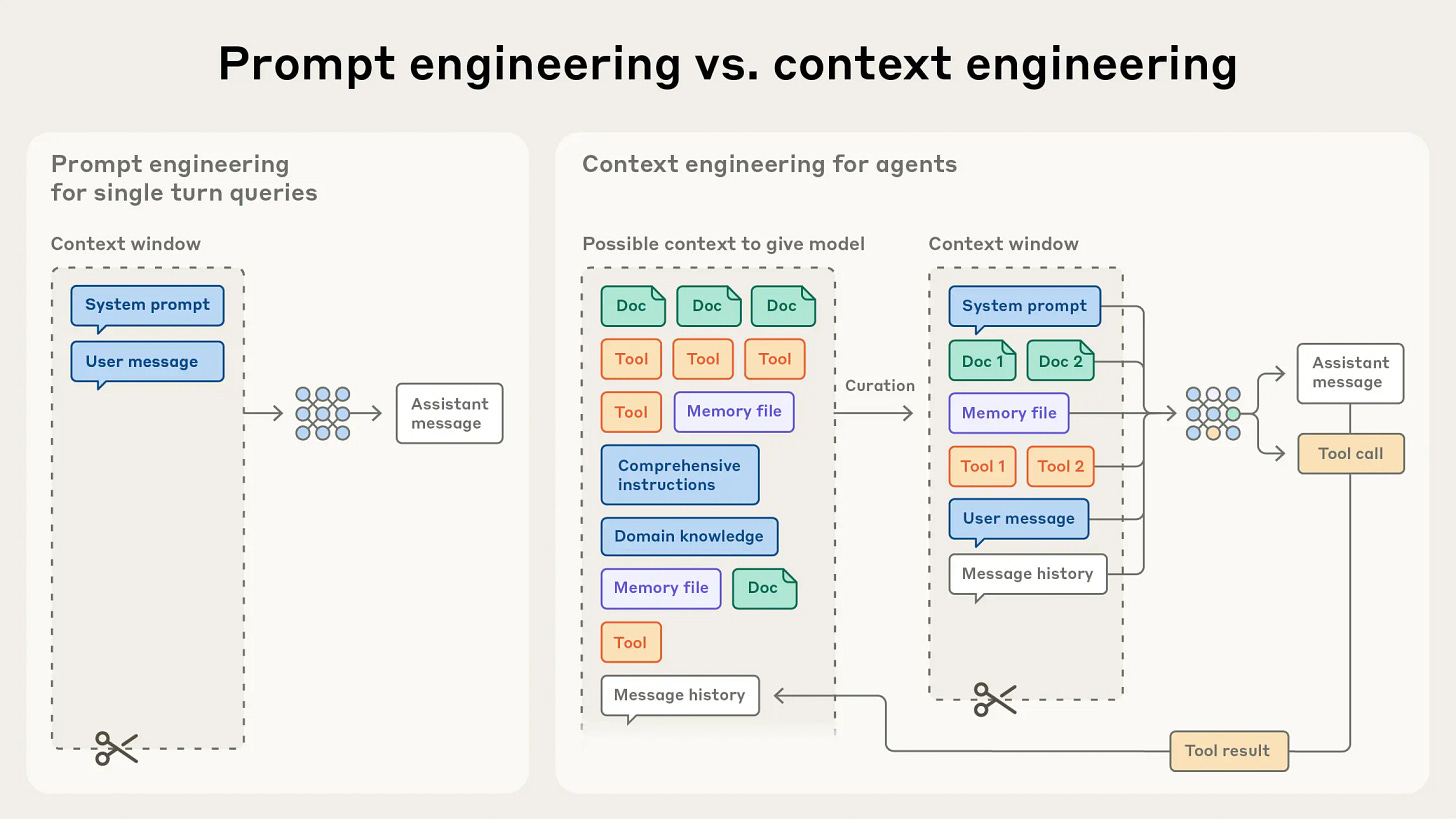

This table summarizes the difference, and this schema from this article about context engineering from Anthropic explains it in detail:

The Architecture of Context

Think of a modern LLM workflow as a layered system: not a single chat window.

System Layer → defines the agent’s identity and objectives

“You are a senior full-stack engineer helping refactor large React Native apps.”

Project Layer → connects the model to your codebase, docs, and issues

Load recent commits, open PRs, and related documentation.

Session Layer → maintains the thread of conversation and goals

“We’re focusing on optimizing Redux store size; ignore unrelated components.”

Prompt Layer → your live instruction

“Refactor the store using RTK Query.”

When these layers are orchestrated, the model behaves less like a chatbot and more like a collaborator.

I personally prefer to also add a progress file to check when the LLM do the changes, and why they did them. So when the session context Window is finished, at least it keeps track of the changes, and doesn’t start from scratch

. Also, with the rules file, it is easier to keep track of the code consistency.

Final Thoughts

Prompt engineering taught us how to talk to AI.

Context engineering teaches us how to work with it.

As context windows expand from 200K to 1M tokens, simply writing clever prompts won’t cut it.

The advantage belongs to developers who can feed the right context at the right time.

Anthropic, OpenAI, and Google are all racing to make models context-aware by design.

This means the real differentiator won’t be what you say to the AI, but how your system helps it remember, relate, and reason.

Context engineering is the new developer literacy: a bridge between AI systems thinking and software design.